Artificial Intelligence & the Government: Who’s Driving the Car?

By Emily Wolfteich, Senior Industry Analyst at Government Business Council

The GAO’s report on the federal government’s adoption of AI is as comprehensive as it can be – but do we like what we see?

Predictions of where the next earthquake will hit California, based on seismic sensors and historical data. A sensor-based app to assist in physical therapy for wounded veterans. Automated detection of hazardous low clouds for air traffic safety. Across the federal government, agencies are looking for increasingly creative ways to use artificial intelligence in support of their missions, whether that means querying data, predicting outcomes, communicating with the public, or automating repetitive tasks. About 1,241 use cases have been reported across 20 non-defense agencies. Now, after a year of review, the Government Accountability Office (GAO) has released an audit of these use cases and the state of AI in the government as a whole – and it has some suggestions.

Tracking AI use cases within the government is not new. In 2020, Executive Order 13960, Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government, mandated that most federal agencies publish a yearly inventory of their AI use cases, available for anyone to see at AI.gov. (For perhaps obvious reasons, defense and intelligence agencies are exempt from showing their hands.) Twenty-seven agencies are represented, with 710 examples (as of September 2023). These use cases vary widely, ranging from sea lion tracking to natural disaster predictions to chatbots, and they use an equally wide variety of AI techniques – robotic process automation (RPA), natural language processing (NLP), neural networks, and deep learning, among others. Yet the reporting of these examples, at least in its current iteration on AI.gov, is neither clear nor standardized regarding what is planned, predicted, in development, or currently in use. In other words, we can’t be sure who is using what, where they’re sourcing their technology, or what data they’re using in the process.

The GAO’s report – the first of its kind, examining both AI acquisition and use as well as the accuracy of agency reports and compliance with federal policy – aims to clear up the picture. It provides recommendations to help agencies standardize their reporting, saying in summary that “federal agencies have taken initial steps to comply with AI requirements in executive orders and federal law; however, more work remains to fully implement these.”

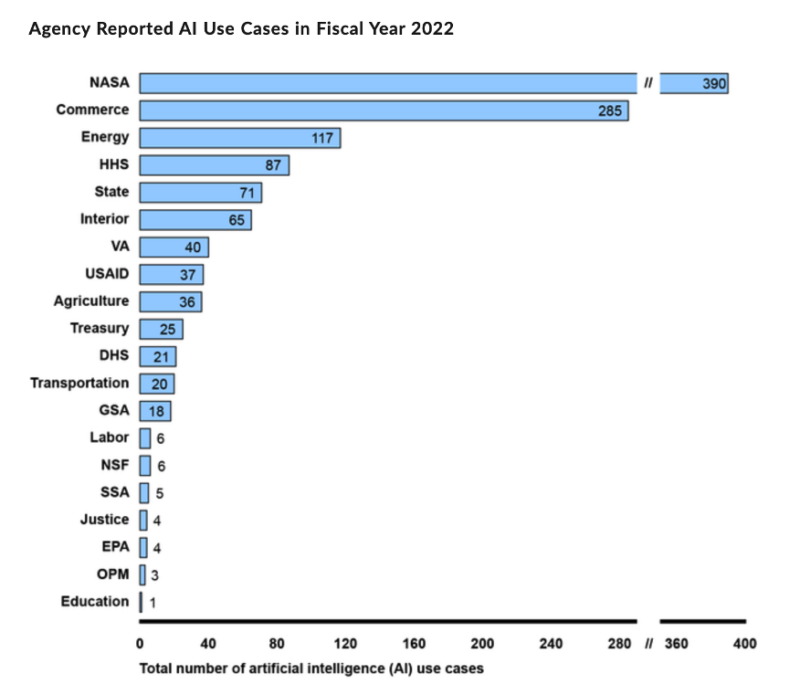

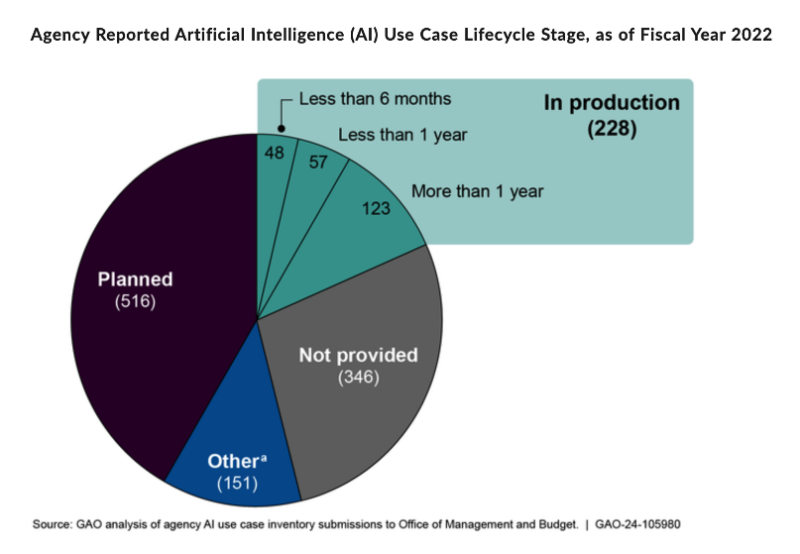

The report relied on agency submissions to the Office of Management and Budget to analyze the current state of AI within the government. The number of reported use cases varies widely across agencies. NASA and the Department of Commerce are well ahead of the others (390 and 285 use cases, respectively), followed by the Departments of Energy (117), Health and Human Services (87), and State (71). Many of these use cases (516) are planned, while only 228 are in production. Overall, the report made 35 recommendations, and it particularly praised the work of Commerce and the General Services Administration (GSA).

However, the report also ran into incomplete or inaccurate data. Out of twenty agencies, only five provided comprehensive information about their use cases, meaning that for over three hundred use cases it was not possible to tell where they were in their life cycle or production timeline. These submissions also differ from the published use cases, in some cases fairly dramatically. NASA, for example, submitted almost four hundred use cases to OMB; its published use case list contains just 33. Some of these differences may be explained by methodology (NASA, for example, says these 33 are projects using AI tools it has developed in-house), but the discrepancy still muddies public understanding of how agencies are actually using AI. Other differences may result from revised understandings within the agencies themselves: the GAO noted that two inventories included AI use cases that were later determined not to be AI at all.

Taking Control

It should be noted that things have changed rapidly since the report’s inception in April 2022. Most notably, the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence establishes new standards and guidelines for agencies to adhere to, including the adoption of safeguards and further guidance for streamlined procurement. Several pieces of government-wide guidance have also been published, including the extensive (and evolving) AI Guide for Government, published in early December by the GSA’s Center of Excellence, that aim to build a shared understanding of how agencies are using AI. But is it all happening quickly enough?

“AI is driving the car whether we want it to or not,” says Kevin Walsh, director of IT and cybersecurity at GAO. “Is somebody going to take the wheel and put some guardrails around this thing, or is it going to keep doing what it wants?”

The Executive Order is a good start. It sets out guidelines and expectations. But as we’ve seen from the GAO report, there’s still a considerable amount of confusion within the federal government about what is and isn’t AI, and about what agencies are expected to report – and know – about their own tools. And this matters – for minimizing risk, for protecting data privacy, and for crafting regulations that keep this powerful tool from getting out of hand. This car gets more torqued up with each new innovation, and those innovations are arriving rapidly.

But the laws and regulations that allow RPA tools access to personal information to quickly triage tax requests cannot be the same ones that allow neural networks to “learn” and make predictions about who might be a terrorist threat, or a good tenant, given the considerable chance that those algorithms may be tainted by bias. We need to understand exactly what’s being used and how we’re using it, and for that we need robust, specific definitions and regulations. There are so many exciting examples of how AI could change our world for the better. The VA, for example, is investing in tools that could help triage eye patients using nothing more than a picture of the eye and predict surgical needs for those with Crohn’s disease, and it is even pursuing a landmark project with the Department of Energy that could identify veterans at risk of suicide. AI has the capability to clean our waterways, improve our cities, and heal us wherever we are. But first we have to climb, quickly and definitively, into the driver’s seat.

Source, chart images: https://www.gao.gov/products/gao-24-105980
