News Bite: AI Slop, John Oliver, and (Literally) Fake News

Written by Emily Wolfteich

Senior Industry Analyst

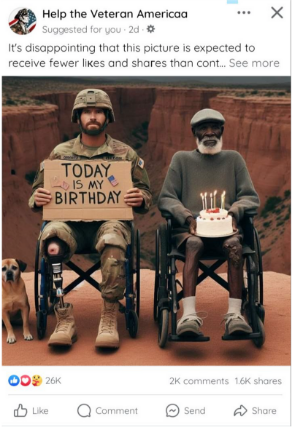

Anyone with an online presence has probably seen a picture or two (or ten). Shrimp Jesus. Catchy videos of awkward text messages, set to banal country songs. Videos or pictures of kittens and children that are a little soft around the edges. Perhaps you noticed right away that they were AI, or maybe something just seemed a little off to you. Or perhaps you didn’t notice at all.

Generative AI tools are incredibly simple to use. All it takes is a few keywords and there’s an image of anything you like – a strikingly lifelike image of Kermit the Frog as painted by Edvard Munch, for example, or the Pope in a Balenciaga puffer jacket. In many cases, it is very clear that the image is generated (again, Shrimp Jesus). But as AI tools become increasingly sophisticated, and the subject of the content becomes more realistic, identifying what is real and what is AI is a more concerning challenge.

The Rise of the Slop

Creating Fake News – For Cheap

What Now?

Experts’ views of AI slop’s implications are mixed. Some see it as a turbocharged tool for propaganda machines, but not inherently an AI problem. Others argue that AI slop is more spam than misinformation, akin to chain emails or other viral social media posts, that platforms will learn to sort out and control in time. Despite many AI images of politicians before the 2024 election, the deepfake apocalypse that many feared did not materialize, though there was evidence of AI bot campaigns. (The question of deepfakes deserves a deeper dive, given their role in increasing waves of cybercrime.)

Some state governments are trying to contain the worst of the spread. Alabama, Colorado, New Hampshire, New Mexico, and Oregon have enacted legislation banning deepfakes, “fraudulent representations” or “materially deceptive media” in elections; California is considering an AI watermark bill that would require AI-generating entities to include digital content provenance. However, when it comes to sharing news on social media, platforms say there is little they can do until their models are better trained to recognize the content.

Meanwhile, AI slop is everywhere. A new study by AI detection startup Originality AI found that over half of long-form, English-language posts on LinkedIn are likely AI-generated. Oliver’s piece discusses the complete takeover of visual site Pinterest by AI-generated home decor and outfits. The sheer volume of fake images is even distorting search results, leading to moments like Google serving up as a top result an AI slop version of one of the most famous paintings in history, Hieronymus Bosch’s The Garden of Earthly Delights.

The consequences of more and more of our digital spaces being consumed by literal fake news range from erosion of trust in journalism to more opportunities for fraud, even posing national security concerns as more “local news” is owned and operated by foreign actors. For now, at least, we hope that no one is fooled by Shrimp Jesus.

To read additional thought leadership from Emily, connect with her on LinkedIn.
